Touchless haptics lead adoption of mid-air gestures

As technology has advanced, so has our way of interacting with it. The prosaic keypad of a 1980s cellular telephone replicated the push-button approach of a traditional desk phone, which no doubt helped its initial introduction. Leap forward twenty years or so, and the capacitive touch screen marked an evolution in how we interact with our smartphones.

Figure 1 – touchless haptic feedback – turning an imaginary control with a mid-air gesture

Engineers have continued to stretch the boundaries of how we interact with our devices, whether a smartphone, computer or oven, in order to achieve a more intuitive and natural user experience. Coupled with the development of touch screens came the concept of gestures. These are based on hand movements that have complemented other forms of human communication, such as speech, for centuries. Gestures are now a common method of interacting with many applications through a device’s touch screen. The user receives an indication that the gesture has been accepted, usually through a change in the on-screen options, an audible tone and/or tactile feedback to the finger. The last of these is commonly called haptic feedback and is typically achieved by way of a slight vibration. Such feedback is a vital component of a touch interface, giving the user confidence that the command was accepted. This approach is now commonplace across phones and gaming controls, along with many other consumer, industrial and automotive applications.

The interaction method now gaining popularity is touchless control. This technique allows devices to be controlled using gestures made in mid-air, with the hand position and movements tracked by 3D sensors or, more precisely, a camera.

Dispensing with a touch control surface, however, removes the possibility of the user receiving any form of haptic feedback. To date this has been the main obstacle to the use of touchless controls. Imagine, however, that you could feel a cooker knob turning beneath your fingers as you make the turning gesture, without there being a knob at all.

The term for this form of interaction is touchless haptic feedback. By providing a tactile indication of a touchless gesture, it is the key to unlocking the potential of touchless gesture recognition across a broad range of applications.

Application examples range from control of an automotive infotainment system, where the driver does not need to locate and interact with physical buttons or a touch screen but can simply make appropriate gestures in the proximity of the unit, to surgical diagnostic equipment. The latter example helps maintain an infection-free environment, since bacteria might otherwise be transmitted via a machine’s control surface.

The technology that makes this possible is called ultrahaptics. It uses ultrasound to create mid-air haptic feedback by displacing the air, thereby creating a pressure difference. When many ultrasound waves are phased to arrive at the same place at the same time, the resulting pressure difference is noticeable by human skin, notably the fingers as they make a mid-air gesture.
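The phasing idea can be illustrated with a minimal sketch. It is not the proprietary Ultrahaptics algorithm: it assumes ideal point emitters of equal amplitude, a speed of sound of 343 m/s, and a hypothetical 10 mm spacing between transducer centres. Each emitter is given a phase offset equal to the phase its wave accumulates on the way to the focal point, so that all contributions arrive in step.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C (assumed)
CARRIER_HZ = 40_000.0   # carrier frequency quoted in the article
PITCH = 0.010           # m between transducer centres (assumed for illustration)
WAVENUMBER = 2 * math.pi * CARRIER_HZ / SPEED_OF_SOUND  # rad/m


def transducer_positions(n=16, pitch=PITCH):
    """Centres of an n x n array lying in the z = 0 plane, centred on the origin."""
    offset = (n - 1) / 2
    return [((ix - offset) * pitch, (iy - offset) * pitch, 0.0)
            for iy in range(n) for ix in range(n)]


def focus_phases(positions, focal_point):
    """Phase offset (radians) per emitter that cancels its propagation delay to the focus."""
    return [(WAVENUMBER * math.dist(p, focal_point)) % (2 * math.pi)
            for p in positions]


def field_amplitude(positions, phases, point):
    """Magnitude of the summed unit-amplitude waves at `point`.

    Ignores attenuation with distance and transducer directivity.
    """
    total = sum(cmath.exp(1j * (phase - WAVENUMBER * math.dist(p, point)))
                for p, phase in zip(positions, phases))
    return abs(total)


if __name__ == "__main__":
    array = transducer_positions()
    focus = (0.0, 0.0, 0.20)  # 20 cm above the centre of the array
    phases = focus_phases(array, focus)
    print("at focus:     ", field_amplitude(array, phases, focus))
    print("30 mm to side:", field_amplitude(array, phases, (0.03, 0.0, 0.20)))
```

At the focal point all 256 contributions add in phase, while a point a few centimetres to the side receives a much weaker, partly cancelling sum. A real array would also need to account for amplitude fall-off and the directivity of each transducer.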

The ultrasound waves generate an acoustic radiation force that, when focused onto the surface of the skin, creates a shear wave in the skin tissue. The resultant displacement of the tissue triggers the skin’s mechanoreceptors, which interpret the force as a tactile or haptic sensation.

One company that has pioneered the commercialisation of touchless haptic feedback is Ultrahaptics. It has created an array of ultrasonic transducers which, together with proprietary signal processing algorithms, provides a complete interface solution. An ultrasound carrier frequency of 40 kHz is modulated at a frequency within the perceptual range of tactile feeling, typically 100 – 300 Hz. Because the system is optimised for hand-based haptics, the feedback works best on those areas of the hand that are most sensitive, namely the fingers and palms. Different tactile sensations can be created via a software API that adjusts the algorithm parameters. The API can adjust the phasing of the modulated ultrasound signal to steer the focal point to any given x, y, z coordinate position within the array boundaries. See Figure 2.
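As a rough illustration of the modulation described above, the following sketch generates a 40 kHz carrier whose amplitude is shaped by a slow envelope. The 200 Hz modulation frequency is an assumed value inside the 100 – 300 Hz range, the raised-cosine envelope is one simple choice, and the function names are hypothetical rather than part of the Ultrahaptics API.

```python
import math

CARRIER_HZ = 40_000.0   # carrier frequency quoted in the article
MODULATION_HZ = 200.0   # assumed value within the 100 - 300 Hz tactile range


def am_sample(t, carrier_hz=CARRIER_HZ, modulation_hz=MODULATION_HZ):
    """Instantaneous value of the amplitude-modulated carrier at time t (seconds)."""
    # Raised-cosine envelope swinging between 0 and 1 at the modulation frequency.
    envelope = 0.5 * (1.0 - math.cos(2 * math.pi * modulation_hz * t))
    return envelope * math.sin(2 * math.pi * carrier_hz * t)


def am_waveform(duration_s, sample_rate_hz=1_000_000.0):
    """Sampled waveform as a list of floats; the 1 MHz sample rate is arbitrary."""
    count = int(round(duration_s * sample_rate_hz))
    return [am_sample(i / sample_rate_hz) for i in range(count)]


if __name__ == "__main__":
    wave = am_waveform(0.005)  # one full 200 Hz modulation period
    print(len(wave), "samples, peak", max(abs(v) for v in wave))
```

The skin cannot follow the 40 kHz carrier itself; it is the slow envelope, repeating here 200 times a second, that falls within the range the mechanoreceptors respond to.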

Figure 2 – Creation of a focal point on the palm using an array of transducers

In Figure 2 the right-hand image highlights the focal point touching the palm with a red dot, while the left-hand image shows the ultrasound waves propagating towards the focal point location.

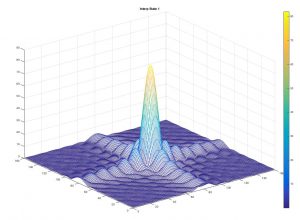

Using a 16 x 16 transducer array, an acoustic field capable of delivering clear sensations over an area of the order of 160 x 160 mm can be achieved. Figure 3 illustrates a simulation of this, with the x and y axes corresponding to the field dimensions and the z axis corresponding to the acoustic pressure. The focal point, about the width of a finger, measures approximately 8.6 mm in diameter, this being determined by the ultrasound’s 40 kHz carrier signal.
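The 8.6 mm figure appears to correspond to one wavelength of the carrier in air. A short check, assuming a speed of sound of 343 m/s (a typical room-temperature value, not stated in the article):

```python
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C (assumed)


def wavelength_mm(frequency_hz, speed_m_s=SPEED_OF_SOUND):
    """Wavelength in millimetres for a sound wave of the given frequency."""
    return speed_m_s / frequency_hz * 1000.0


if __name__ == "__main__":
    print(f"{wavelength_mm(40_000.0):.1f} mm")  # prints "8.6 mm"
```

A higher carrier frequency would give a shorter wavelength and, by the same reasoning, a smaller focal point.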

Figure 3 – Simulated acoustic energy field of single focal point generated by a 16 x 16 transducer array

To help developers quickly prototype and incorporate a touchless haptic feedback interface into their designs, Ultrahaptics supplies an evaluation kit. Providing all the key components necessary, the Ultrahaptics UHEV1 kit includes a 16 x 16 transducer array, a Leap Motion gesture tracking camera, the Ultrahaptics SDK and the array APIs. The kit supports up to 256 transducers, but the actual number required by an application is dictated by the required 3D area above the array, together with the type and strength of the haptic sensation desired. A simulation model helps determine the 3D interaction space for a given array size and set of transducer characteristics. Note that the beamwidth of the transducers, typically 60 degrees, causes the interaction zone to extend beyond the physical dimensions of the transducer array. Also, the larger the number of transducers used in the array, the greater the height at which the interaction zone can exist above the array. The UHEV1 kit offers an interaction zone with a maximum height in the range 15 to 80 cm, although the greatest haptic sensation will always be generated closer to the transducer array. Changing the shape and orientation of the transducers and the overall form factor of the array varies the shape of the interaction zone created. It is also possible to arrange the transducers in a strip, or in curved or angled layouts.
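To give a feel for how the beamwidth widens the zone with height, here is a deliberately simple geometric sketch. It is not the Ultrahaptics simulation model: it treats each edge transducer as emitting into a cone with a 30 degree half-angle and assumes a hypothetical array width of 160 mm, so it yields only an outer bound on the region the array can reach, not the region where a sensation is strong enough to feel.

```python
import math


def zone_width_m(height_m, array_width_m=0.16, beamwidth_deg=60.0):
    """Outer width of the region reached by the edge beams at a given height.

    array_width_m is an assumed figure; beamwidth_deg is the typical value
    quoted in the article.
    """
    half_angle = math.radians(beamwidth_deg / 2)
    return array_width_m + 2 * height_m * math.tan(half_angle)


if __name__ == "__main__":
    for height_cm in (15, 40, 80):
        width_cm = zone_width_m(height_cm / 100) * 100
        print(f"height {height_cm} cm: up to {width_cm:.0f} cm wide")
```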

The SDK that comes with the UHEV1 includes the API for creating multiple focal points and a number of pre-defined gesture and control sensations. The SDK also provides two methods of modulating the ultrasonic carrier signal: amplitude modulation (AM) and the time point streaming emitter (TPS). AM uses the least processing power but does not permit the control points to be updated at a rate greater than 150 Hz. In contrast, TPS permits a significantly faster refresh rate of up to 10 kHz and gives the developer control of the shape of the generated waveform.
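One way to see what the difference in update rate means in practice is to ask how far a moving control point jumps between updates. The sketch below is purely illustrative and does not use the SDK: it assumes a control point tracing a circle of 20 mm radius five times a second, both of which are made-up figures.

```python
import math


def step_per_update_mm(update_rate_hz, radius_mm=20.0, revolutions_per_s=5.0):
    """Arc length travelled between consecutive control point updates.

    radius_mm and revolutions_per_s describe a hypothetical circular path.
    """
    path_speed_mm_s = 2 * math.pi * radius_mm * revolutions_per_s
    return path_speed_mm_s / update_rate_hz


if __name__ == "__main__":
    for label, rate_hz in (("AM", 150.0), ("TPS", 10_000.0)):
        print(f"{label}: {step_per_update_mm(rate_hz):.2f} mm per update")
```

Under these assumptions the point moves about 4.2 mm per update at 150 Hz, a sizeable fraction of the focal point's own width, but only about 0.06 mm at 10 kHz, which allows a much smoother path.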

Touchless haptic feedback promises to unleash a raft of new and intuitive interfaces for a broad range of applications. The innovation continues with research into using a two-dimensional array to create 3D interaction shapes such as a sphere, pyramid and cube. In addition, Ultrahaptics is investigating suitable cover materials that provide environmental protection for the transducer array while being acoustically transparent and durable enough to suit the application’s use cases.

By Bastien Beauvois, Business Development & Product Marketing at Ultrahaptics